The Gesture Control System introduces an AI-driven, touch-free interaction model for the healthcare industry. Using computer vision and machine learning, the system recognizes and interprets specific hand gestures captured by a webcam, eliminating the need for a mouse or keyboard.

This system aims to:

* Minimize physical contact with devices in sterile medical environments.

* Prevent cross-infections by reducing the need for touch-based interaction.

* Improve workflow efficiency for healthcare practitioners.

* Provide an affordable and accurate alternative to existing motion-sensing devices like Kinect.

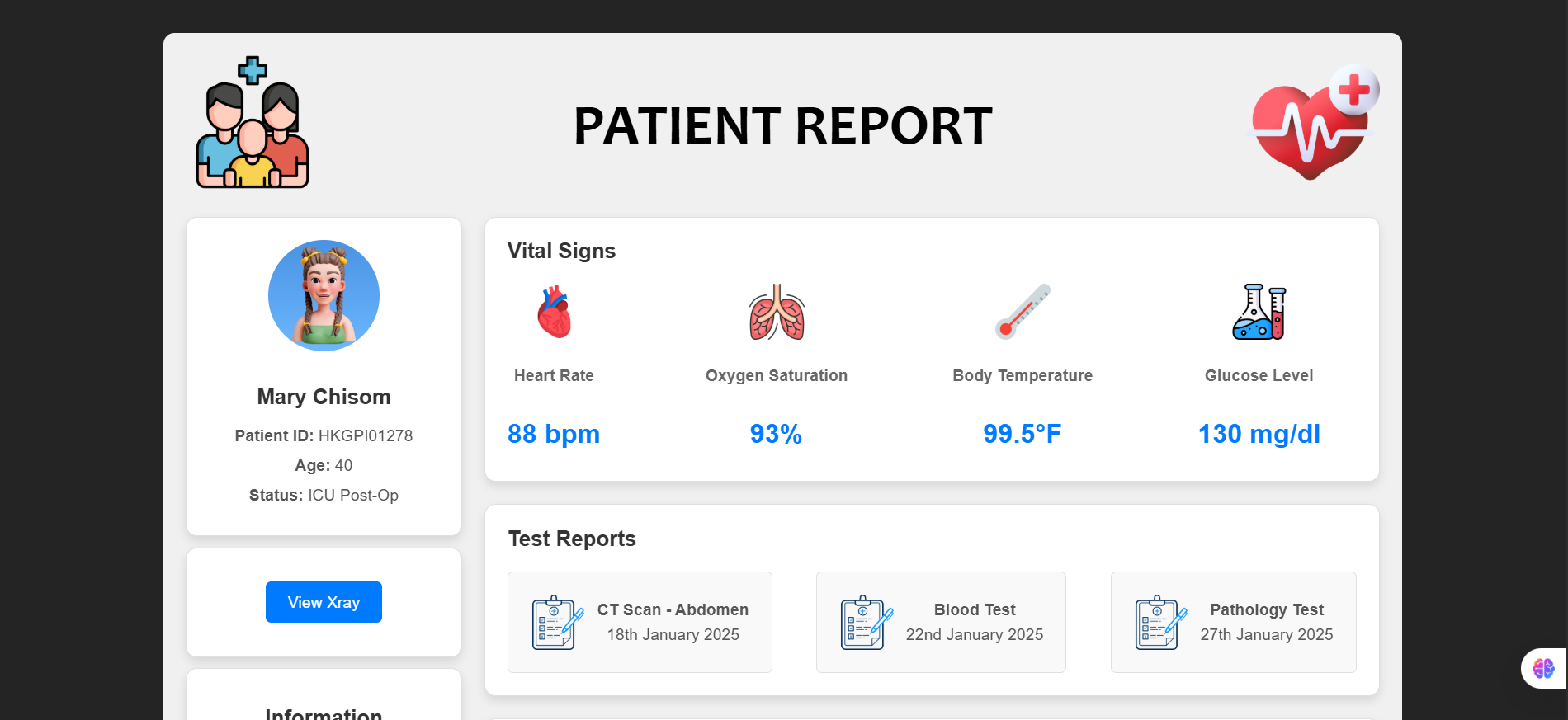

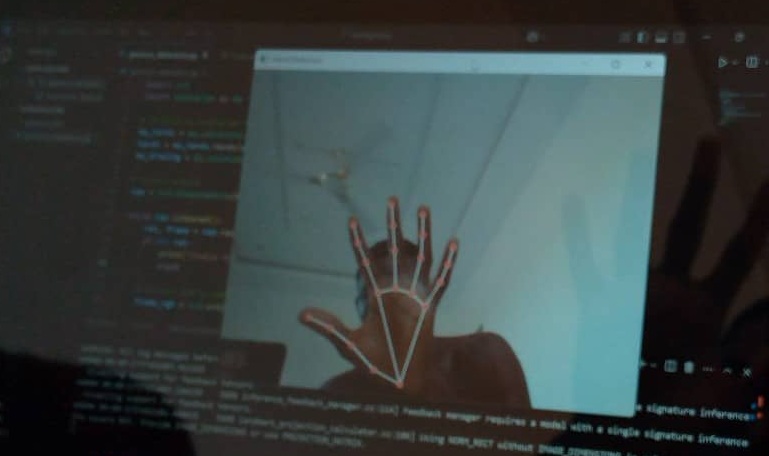

By leveraging React for the frontend, Python for AI processing, and MediaPipe for hand gesture recognition, this project brings real-time, low-cost, and efficient gesture-based control to healthcare applications.
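This section does not detail how the Python recognizer passes results to the React frontend. One plausible design, sketched below under stated assumptions, smooths the per-frame predictions so that a gesture fires only after it has been held for a few consecutive frames. Each accepted gesture is then serialized as a JSON message, which a transport such as a WebSocket (assumed, not shown) would deliver to the browser. `GestureDebouncer`, `to_event`, the message fields, and the gesture names are all hypothetical.

```python
import json
from collections import deque
from typing import Deque, List, Optional


class GestureDebouncer:
    """Report a gesture once it has been seen in `hold_frames` consecutive frames.

    Hypothetical helper: the mapping from per-frame predictions to UI
    commands is an assumption, not the project's documented design.
    """

    def __init__(self, hold_frames: int = 5) -> None:
        self.hold_frames = hold_frames
        self.recent: Deque[Optional[str]] = deque(maxlen=hold_frames)
        self.last_emitted: Optional[str] = None

    def update(self, gesture: Optional[str]) -> Optional[str]:
        """Feed one frame's prediction (None = no hand); return a gesture to fire, or None."""
        self.recent.append(gesture)
        if gesture is None:
            # Hand left the frame: allow the same gesture to fire again later.
            self.last_emitted = None
            return None
        stable = len(self.recent) == self.hold_frames and all(
            g == gesture for g in self.recent
        )
        # Fire once per hold, not on every frame the gesture stays visible.
        if stable and gesture != self.last_emitted:
            self.last_emitted = gesture
            return gesture
        return None


def to_event(gesture: str, frame_index: int) -> str:
    """Serialize a gesture as JSON for the React frontend (hypothetical message schema)."""
    return json.dumps({"type": "gesture", "name": gesture, "frame": frame_index})


# Simulated per-frame predictions; gesture names are placeholders.
debouncer = GestureDebouncer(hold_frames=3)
frames = ["open_palm", "open_palm", "fist", "fist", "fist", "fist",
          None, "fist", "fist", "fist"]
events: List[str] = []
for i, prediction in enumerate(frames):
    fired = debouncer.update(prediction)
    if fired is not None:
        events.append(to_event(fired, i))

print(events)
```

In this simulated run, the brief `open_palm` never fires. The `fist` fires once at frame 4 and again at frame 9 after the hand leaves and returns, so holding a gesture does not trigger repeated commands.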

THE STORY

Traditional touch-based interfaces in healthcare settings pose significant hygiene risks, which makes gesture-based control a valuable innovation for the industry. Existing motion-sensing solutions such as Kinect are either too expensive or not precise enough for real-world medical applications, so this project was developed as a cost-effective and reliable alternative.

The core challenge was to design a system that:

* Recognizes and interprets essential hand gestures accurately.

* Requires minimal computing power while remaining fast and responsive.

* Is easily integrated into existing medical workflows.

With these goals in mind, the Gesture Control System was designed to support five essential gestures, ensuring practicality and ease of use in healthcare settings.

OUR APPROACH

1. Initial Planning

A structured plan was developed to define:

* Gesture recognition logic and machine learning models.

* Technical specifications for integrating AI-driven hand tracking.

* Healthcare-focused UI/UX design considerations.

2. Wireframing & Layout

Wireframes were created to map out:

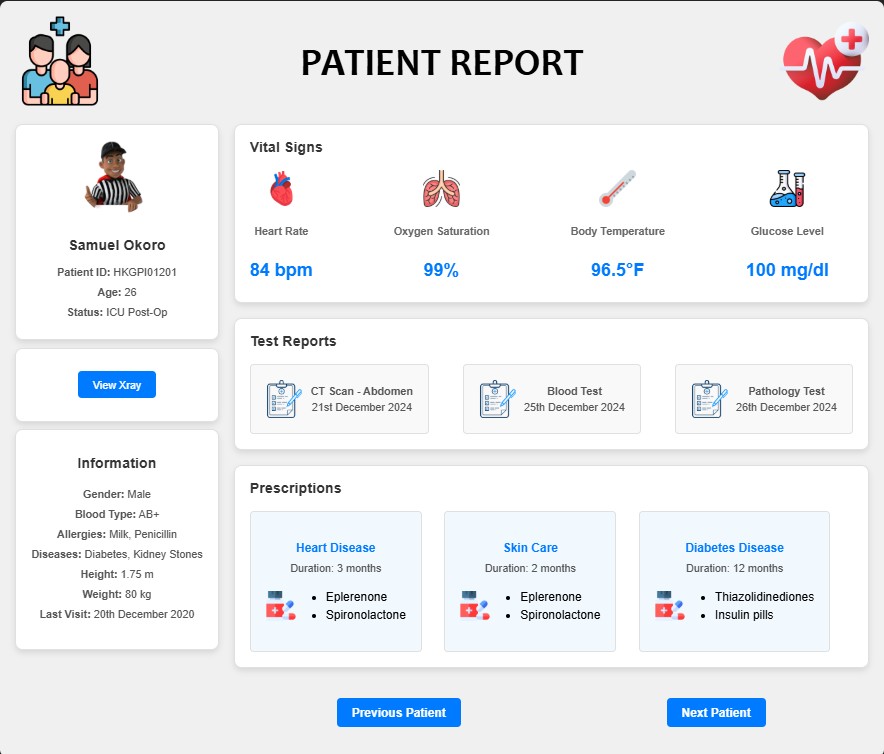

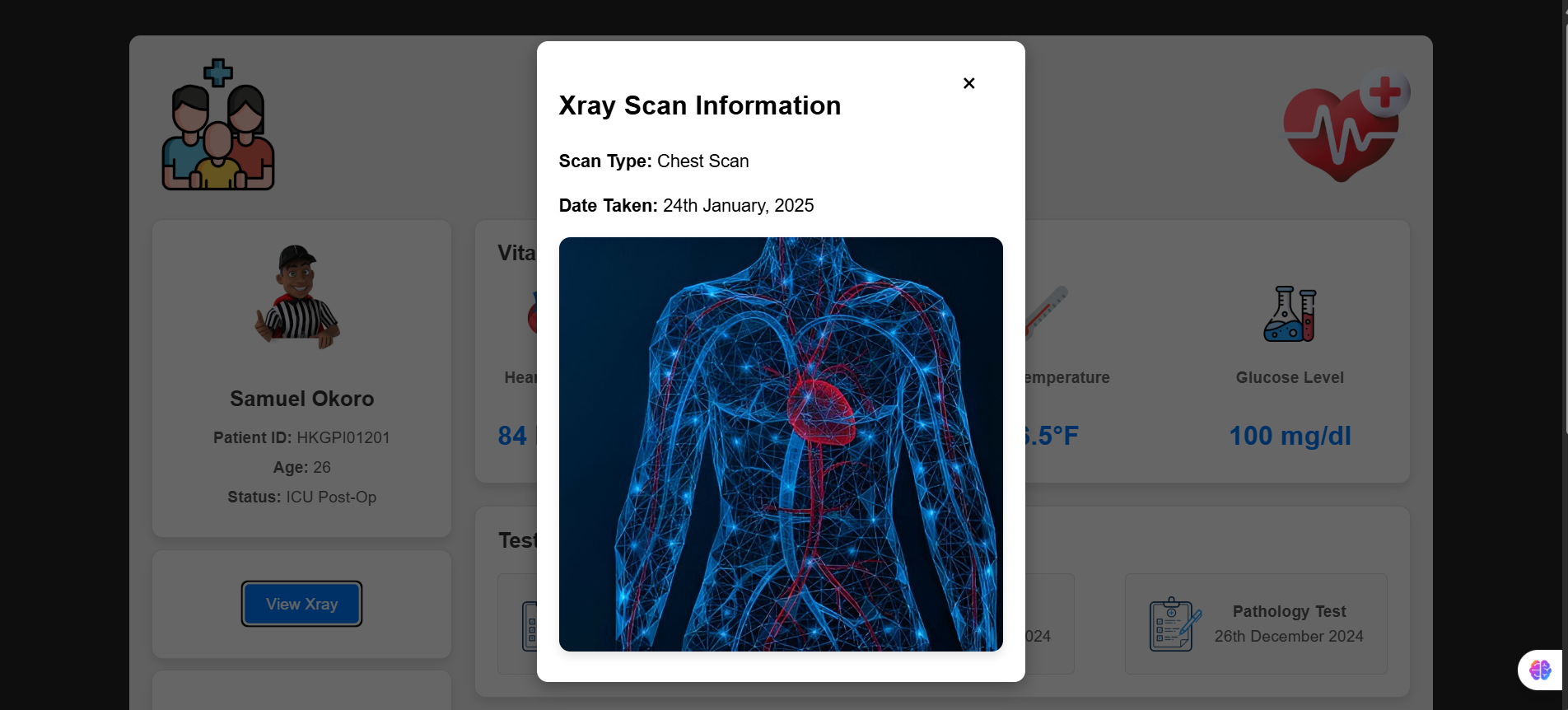

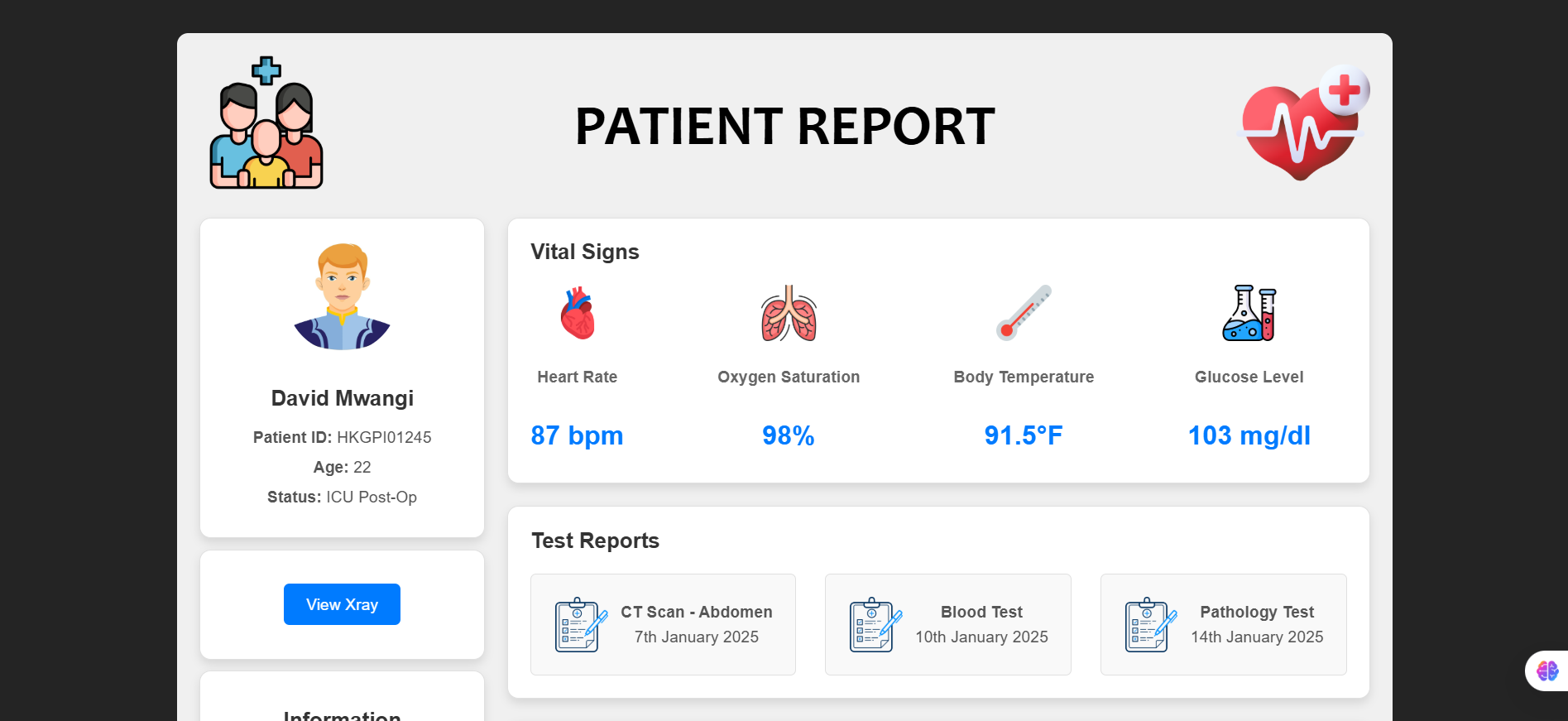

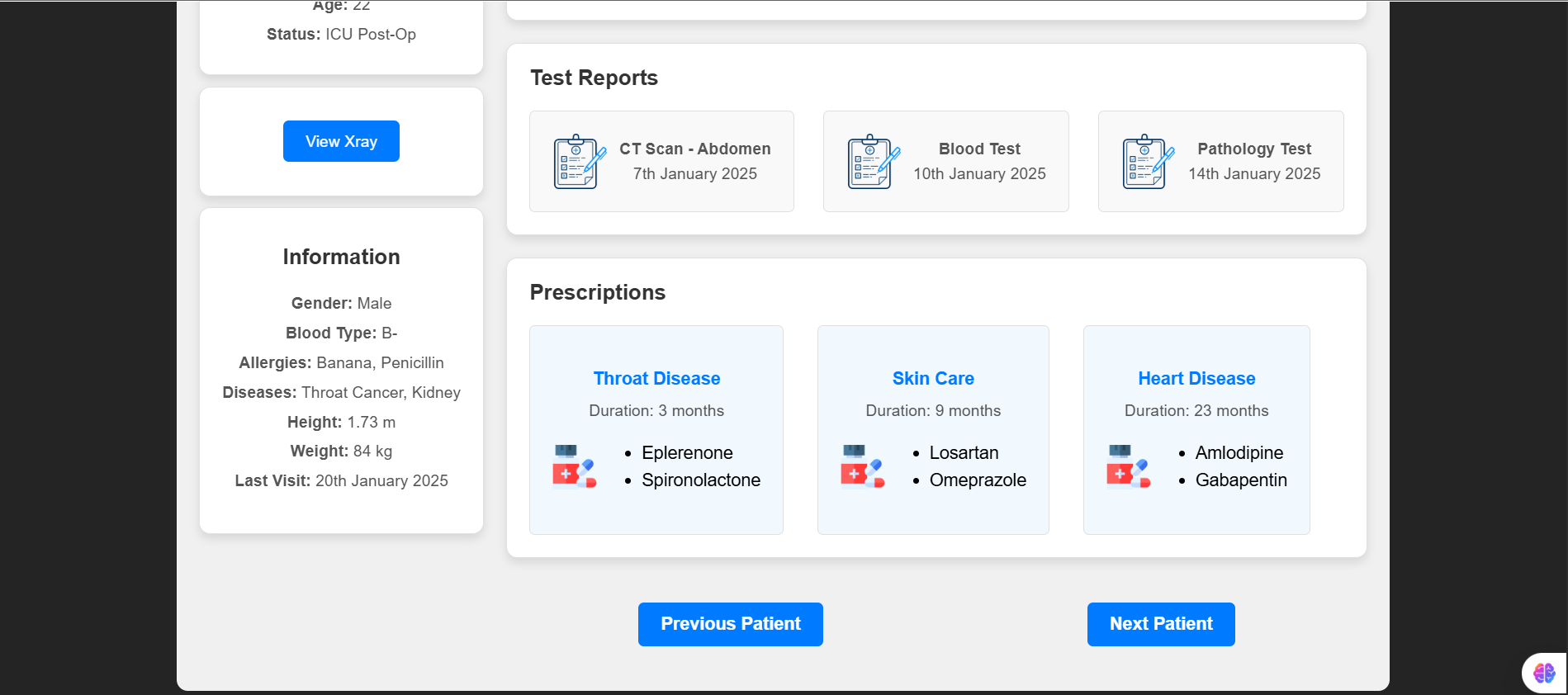

* Gesture-based interactions with medical applications.

* User-friendly dashboard for hands-free navigation.

3. High-Fidelity UI Design

The interface was styled with Tailwind CSS to give medical practitioners a clean, professional, and intuitive experience.

4. Development

* Frontend: Built with React for a smooth, interactive UI.

* AI Processing: Developed using Python and MediaPipe, enabling gesture recognition through webcam-based tracking.

* Applications: Four core applications were developed (Calendar, Kanban, Text Editor, Color Picker).

* Machine Learning Models: Trained to detect and interpret the five primary gestures accurately.
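The recognition step can be illustrated with a small rule-based classifier over MediaPipe's 21 hand landmarks. This is a swapped-in heuristic, not the project's trained model. It treats a finger as extended when its tip sits above its PIP joint in normalized image coordinates, where y grows downward. That assumes an upright hand facing the camera, and the thumb is ignored. The five-gesture vocabulary in `GESTURES` and the `synthetic_hand` test helper are hypothetical, since the five gestures are not named here. With the legacy MediaPipe Hands solution, the input list would be built from each detected hand's `landmark` entries as `(lm.x, lm.y)` pairs.

```python
from typing import Dict, List, Optional, Sequence, Tuple

Landmark = Tuple[float, float]  # (x, y), normalized to [0, 1]; y grows downward

# MediaPipe Hands landmark indices (21-point hand model).
FINGER_TIPS = {"index": 8, "middle": 12, "ring": 16, "pinky": 20}
FINGER_PIPS = {"index": 6, "middle": 10, "ring": 14, "pinky": 18}
FINGERS = ("index", "middle", "ring", "pinky")

# Hypothetical five-gesture vocabulary keyed by (index, middle, ring, pinky) extended.
GESTURES: Dict[Tuple[bool, ...], str] = {
    (False, False, False, False): "fist",
    (True, False, False, False): "point",
    (True, True, False, False): "two_fingers",
    (True, True, True, False): "three_fingers",
    (True, True, True, True): "open_palm",
}


def finger_states(landmarks: Sequence[Landmark]) -> Tuple[bool, ...]:
    """Return which of the four fingers are extended (thumb ignored)."""
    if len(landmarks) != 21:
        raise ValueError("expected 21 hand landmarks")
    # Extended = fingertip is higher in the image (smaller y) than its PIP joint.
    return tuple(
        landmarks[FINGER_TIPS[f]][1] < landmarks[FINGER_PIPS[f]][1] for f in FINGERS
    )


def classify(landmarks: Sequence[Landmark]) -> Optional[str]:
    """Map a hand pose to a gesture name, or None if it matches no known gesture."""
    return GESTURES.get(finger_states(landmarks))


def synthetic_hand(extended: Sequence[str]) -> List[Landmark]:
    """Hypothetical test helper: fake upright hand with the named fingers extended."""
    points: List[Landmark] = [(0.5, 0.6)] * 21
    for finger in FINGERS:
        points[FINGER_PIPS[finger]] = (0.5, 0.5)
        points[FINGER_TIPS[finger]] = (0.5, 0.3 if finger in extended else 0.7)
    return points


print(classify(synthetic_hand(["index", "middle"])))  # two_fingers
```

A trained model, for example a small classifier over the landmark coordinates, would replace `finger_states` and the lookup table, but the landmarks-in, label-out interface could stay the same.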

5. Testing

Rigorous testing was conducted to ensure:

* Accurate gesture recognition across different lighting conditions.

* Low latency for real-time responsiveness.

* Compatibility with existing medical application workflows.
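The first two test goals above, accuracy under different lighting conditions and low latency, can be summarized from logged trials with a small harness like the one below. It is a sketch: the `TrialResult` record, the lighting labels, and the sample numbers are invented for illustration and are not measurements from this project. Latency is reported as a nearest-rank percentile because occasional slow frames, rather than the average, are what users notice as lag.

```python
import math
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class TrialResult:
    lighting: str      # hypothetical condition label, e.g. "bright" or "dim"
    expected: str      # gesture the tester performed
    predicted: str     # gesture the system reported
    latency_ms: float  # time from frame capture to recognized gesture


def accuracy_by_lighting(results: List[TrialResult]) -> Dict[str, float]:
    """Fraction of correctly recognized gestures per lighting condition."""
    hits: Dict[str, int] = defaultdict(int)
    totals: Dict[str, int] = defaultdict(int)
    for r in results:
        totals[r.lighting] += 1
        if r.expected == r.predicted:
            hits[r.lighting] += 1
    return {cond: hits[cond] / totals[cond] for cond in totals}


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile, e.g. pct=95 for p95 latency."""
    if not values:
        raise ValueError("no values")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


# Illustrative trials only, not project data.
trials = [
    TrialResult("bright", "fist", "fist", 38.0),
    TrialResult("bright", "point", "point", 41.0),
    TrialResult("dim", "fist", "fist", 55.0),
    TrialResult("dim", "point", "open_palm", 62.0),
]
print(accuracy_by_lighting(trials))                    # {'bright': 1.0, 'dim': 0.5}
print(percentile([t.latency_ms for t in trials], 95))  # 62.0
```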

6. Launch

After deployment, continuous monitoring and updates were implemented to:

* Refine gesture recognition accuracy.

* Enhance UI/UX for seamless medical interactions.

* Optimize performance for real-time processing.

THE OUTCOME

The Gesture Control System delivers a hands-free, AI-powered solution that improves hygiene, efficiency, and accessibility in medical environments. Because medical professionals can operate computers with hand gestures alone, the system reduces the risk of cross-infection, streamlines clinical workflows, and helps maintain a sterile working environment.

With its cost-effective implementation and real-time AI capabilities, the system is an innovative step toward touchless healthcare technology.